Message Passing Decoding of LDPC Codes on Erasure Channel

We describe the message passing algorithm for bit-wise maximum a posteriori probability (MAP) decoding of linear codes on erasure channels.

In the experiment titled 'Maximum Likelihood (ML) Decoding of Codes on Binary Input Channels', we studied the block-MAP estimate, whose expression is similar to that of the bit-wise MAP estimate described here. There, however, the focus was on estimating the entire transmitted codeword (the entire 'block') by maximizing the a posteriori probability of the whole codeword given the received vector. In this and the subsequent experiments on decoding of LDPC codes, we instead perform **bit-wise MAP decoding**.

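Let $\mathbf{x}$ be the codeword transmitted from the linear code $\mathcal{C}$ of dimension $k$. Let $\mathbf{y}$ be the received vector from the channel. The bit-wise MAP estimate of the bit $x_i$ is based on the value of the log-likelihood ratio (LLR) of $x_i$ given $\mathbf{y}$, denoted by $L(x_i \mid \mathbf{y})$, and defined as follows.

$$L(x_i \mid \mathbf{y}) = \log \frac{p(x_i = 0 \mid \mathbf{y})}{p(x_i = 1 \mid \mathbf{y})}.$$

The bit-wise MAP estimate for $x_i$ is then denoted by $\hat{x}_i$ and calculated as follows.

$$\hat{x}_i = \begin{cases} 0, & \text{if } L(x_i \mid \mathbf{y}) > 0, \\ 1, & \text{if } L(x_i \mid \mathbf{y}) < 0. \end{cases}$$

Note that we assume ties are broken arbitrarily, i.e., the decoder chooses the estimate randomly if $L(x_i \mid \mathbf{y}) = 0$.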
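As a small illustration, the decision rule can be written as a function of the LLR. This is only a sketch: the function name `bitwise_map_decision` is hypothetical, and it assumes the common convention that a positive LLR favours the bit 0 and a negative LLR favours the bit 1.

```python
import random


def bitwise_map_decision(llr, rng=random):
    """Return the bit-wise MAP estimate for one bit from its LLR.

    Assumed convention: LLR = log(P(bit = 0 | y) / P(bit = 1 | y)).
    """
    if llr > 0:
        return 0  # bit 0 is more likely
    if llr < 0:
        return 1  # bit 1 is more likely
    # Tie: both values are equally likely, so pick one at random.
    return rng.randint(0, 1)
```

On the erasure channel, a bit whose value is not determined by the received vector corresponds to the tie case, which is why the decoder described next leaves such bits erased rather than guessing.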

With this in mind, we describe a decoding technique based on a general class of algorithms on graphs, known as message passing algorithms. Essentially, we run a number of rounds of passing messages from the variable nodes of the Tanner graph to the check nodes and vice versa. The algorithm terminates in one of two ways. The first possibility is that we end with a fully decoded codeword estimate, which is also correct. The second possibility is that we end with a partially decoded estimate, which is correct in the decoded bits but still has some erased coordinates that the message passing algorithm cannot resolve.

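In other words, this iterative procedure of message passing carries out bit-wise decoding of LDPC codes in an efficient manner. On the erasure channel it never decodes a bit incorrectly, although, as the last example in this experiment shows, it can stop before recovering every bit that a MAP decoder could recover.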

Before describing the algorithm, we give a short note on the motivation for this algorithm.

The connection between the bit-wise MAP algorithm and identifying compatible codeword(s)

Observe that, in the erasure channel, for a transmitted codeword $\mathbf{x}$ the received vector $\mathbf{y}$ is such that $y_i = x_i$ or $y_i$ is an erasure, for all $i$. The goal of decoding is therefore to reconstruct the erased symbols, as the non-erased symbols in $\mathbf{x}$ and $\mathbf{y}$ are identical.

It is not difficult to see that if the bit-wise MAP estimate $\hat{x}_i$ of the bit $x_i$ is to be exactly the correct bit of the transmitted codeword, then the $i$-th coordinate must be the same in all codewords that are compatible with the received vector $\mathbf{y}$ (i.e., those codewords which match $\mathbf{y}$ in all unerased positions).

If this is to hold for every erased bit, there must be exactly one codeword in $\mathcal{C}$ that is compatible with $\mathbf{y}$, and it must be the transmitted codeword.

Now, if there is no such unique codeword, the bit-wise MAP decoder cannot identify the exact transmitted codeword, and we cannot estimate all the codeword bits uniquely. We can decode only those bits which are identical in all codewords compatible with $\mathbf{y}$. The remaining coordinates cannot be identified uniquely.
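The idea of compatible codewords can be made concrete with a brute-force sketch. The parity check matrix `H` below is a hypothetical stand-in (a standard $(7,4)$ Hamming code), not the matrix used in this experiment. The use of `None` as the erasure marker and the function names are also assumptions made for illustration. The sketch lists all codewords, keeps those compatible with the received vector, and decodes exactly those coordinates on which all compatible codewords agree.

```python
from itertools import product

# Hypothetical example code (NOT the matrix of this experiment):
# parity check matrix of a (7,4) Hamming code.
H = [
    [1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]
ERASURE = None  # assumed marker for an erased position


def codewords(H):
    """All binary vectors c with H c = 0 (mod 2), by exhaustive search."""
    n = len(H[0])
    return [c for c in product((0, 1), repeat=n)
            if all(sum(h * x for h, x in zip(row, c)) % 2 == 0 for row in H)]


def compatible(H, y):
    """Codewords that match y in every unerased position."""
    return [c for c in codewords(H)
            if all(yi is ERASURE or yi == ci for yi, ci in zip(y, c))]


def bitwise_map_erasure(H, y):
    """Decode each coordinate that is identical in all compatible codewords."""
    comp = compatible(H, y)
    estimate = []
    for i in range(len(y)):
        values = {c[i] for c in comp}
        estimate.append(values.pop() if len(values) == 1 else ERASURE)
    return estimate


# Two erasures: a single compatible codeword remains, so every bit is decoded.
print(bitwise_map_erasure(H, [None, None, 0, 0, 0, 0, 0]))
# Three erasures covering the support of a codeword: two compatible
# codewords remain, and the three erased bits stay erased.
print(bitwise_map_erasure(H, [None, None, None, 0, 0, 0, 0]))
```

The exhaustive search grows exponentially with the code dimension, which is why an efficient graph-based procedure is of interest.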

With these ideas in mind, we are now ready to describe the working of the message-passing algorithm.

Message Passing on the Tanner Graph for Erasure Channels

Consider the code with the parity check matrix given as follows.

Observe that any two columns of this matrix are linearly independent, so this code is capable of correcting any erasure pattern with up to two erasures. In fact, some patterns with up to three erasures can also be corrected by a block-wise MAP decoder. But here, we will focus on at most two erasures. Further, a generator matrix of this code can be obtained from the parity check matrix.

Clearly, this code has codewords. Assume that the codeword transmitted is . Let the received vector be . We see that there are three erasures here. From the discussion in the previous section, finding the bit-wise MAP estimate in this case is the same as finding each bit which is identical in all codewords that are compatible with the received vector. Writing out the entire set of codewords, we can observe that there is in fact only one codeword, namely the transmitted codeword, which is compatible with the received vector. We now present how the peeling decoder obtains this codeword systematically.

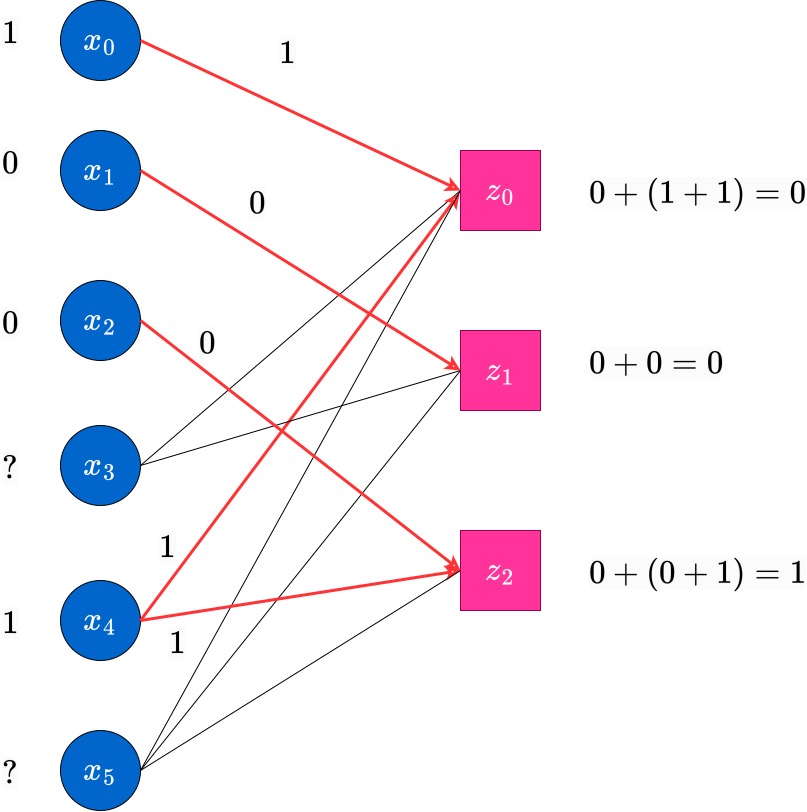

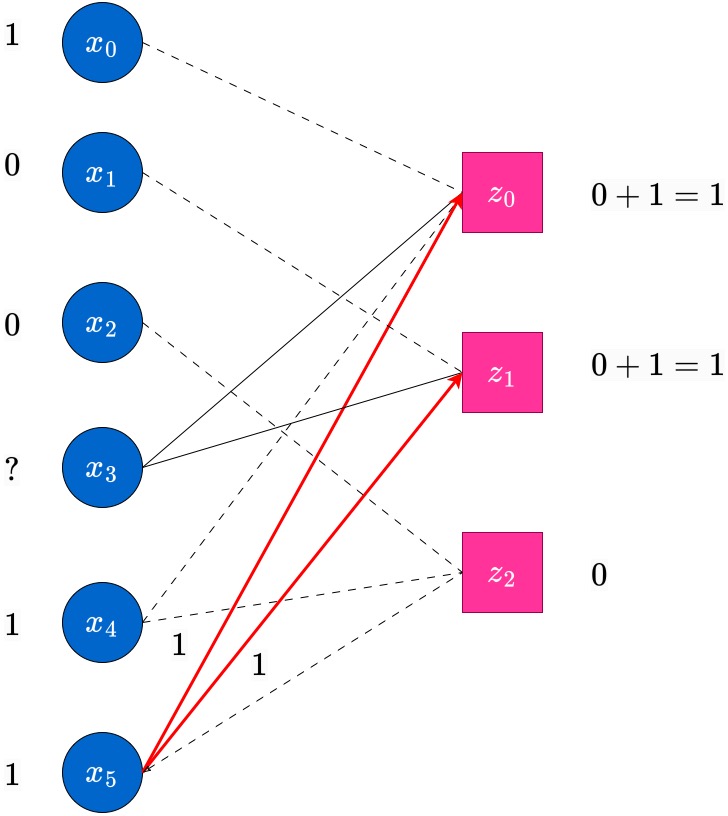

We draw the initial Tanner graph in the following figure.

[Figure: initial Tanner graph]

Here, the symbols near the variable nodes denote the received bits, whereas the symbols near the check nodes denote the status of the check node. The status of the check nodes will keep changing through the iterations (essentially, what we are doing is solving a system of linear equations in a simplistic way). Also, the decoded bits (which were previously erased) will keep appearing near the variable nodes as and when they are decoded.

In the first round, messages (which are the same as the values of the variable nodes) are passed from each non-erased variable node to all the check nodes it is connected to. This is indicated by the red edges with arrowheads. Each check node which receives a message in this round updates its value as current value = previous value + (sum of received values), where the sum is an XOR (binary) sum. These updates, along with their calculations, are also shown in the following figure.

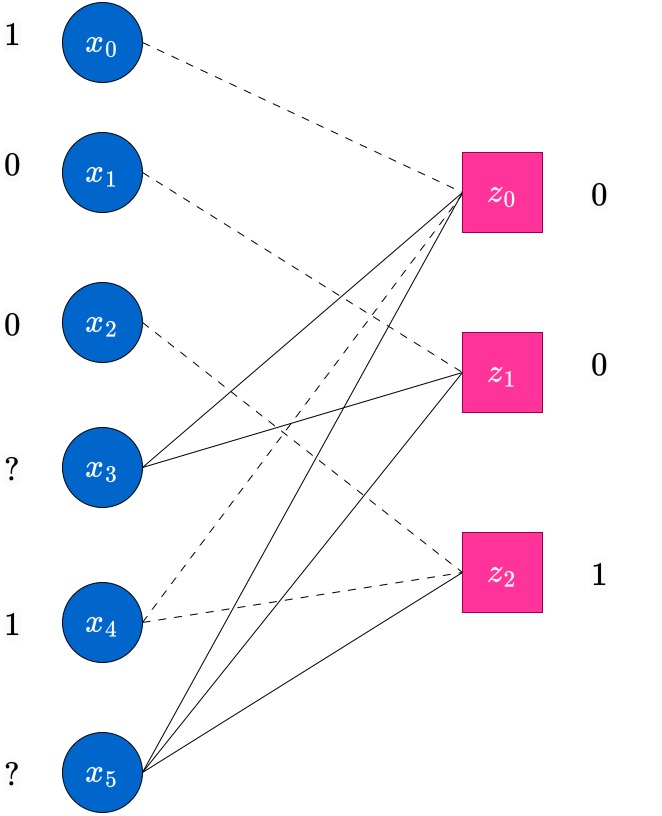

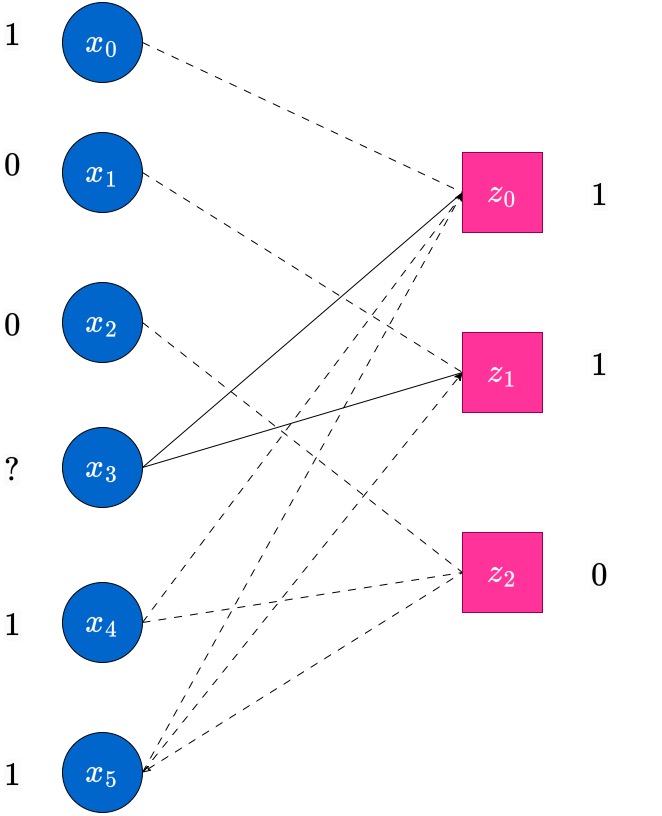

At the end of this round, the edges on which messages were passed will no longer be useful. To indicate this, we blur these edges and draw them as dotted lines. The reader can treat them as absent edges from this point on. The next figure shows this state of the updated Tanner graph, with the values near the check nodes also updated. This completes the first round of the peeling decoding algorithm.

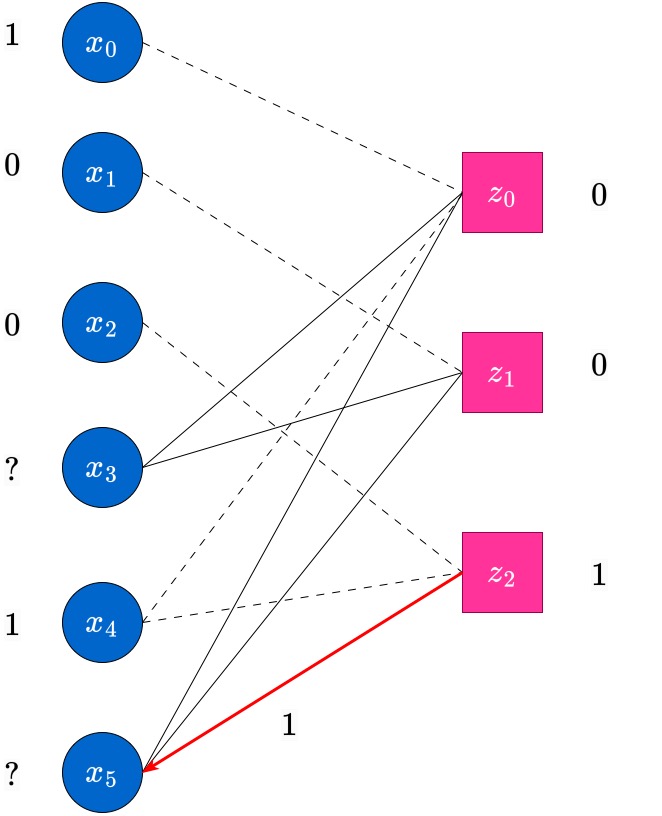
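The first round can be sketched in a few lines. The matrix `H` is again a hypothetical $(7,4)$ Hamming parity check matrix, not the one used in this experiment. The received vector `y`, the `None` erasure marker, and the function name `first_round` are illustrative assumptions. Each check node starts at value 0 and accumulates the XOR of the messages from its non-erased neighbours. Only the edges to erased variable nodes remain in the graph.

```python
# Hypothetical example code (NOT the matrix of this experiment).
H = [
    [1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]


def first_round(H, y):
    """Variable-to-check round: return (check_values, remaining_edges).

    remaining_edges holds (variable index, check index) pairs that are
    still 'solid', i.e. edges attached to erased variable nodes.
    """
    check_values = []
    remaining_edges = []
    for j, row in enumerate(H):
        value = 0  # initial value of check node j
        for i, h in enumerate(row):
            if h == 1:
                if y[i] is None:
                    remaining_edges.append((i, j))  # edge stays in the graph
                else:
                    value ^= y[i]  # XOR in the received bit; edge is used up
        check_values.append(value)
    return check_values, remaining_edges


# Assumed received vector with erasures in positions 0 and 2.
y = [None, 1, None, 0, 0, 0, 0]
values, edges = first_round(H, y)
print(values)  # [0, 1, 0]
print(edges)   # [(0, 0), (2, 0), (2, 1)]
```

In this illustrative run, check node 1 is left with a single solid edge, so it is the node that would send a message in the following round.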

Now, round 2 begins. In this round, messages are passed from the right-hand-side (check) nodes which have only a single non-dotted edge incident on them (i.e., degree-1 check nodes). Each such check node passes a message (its current value) to the single variable node to which it is connected. This is indicated in the following figure. In this case, a check node with degree 1 sends a message to the variable node to which it is connected.

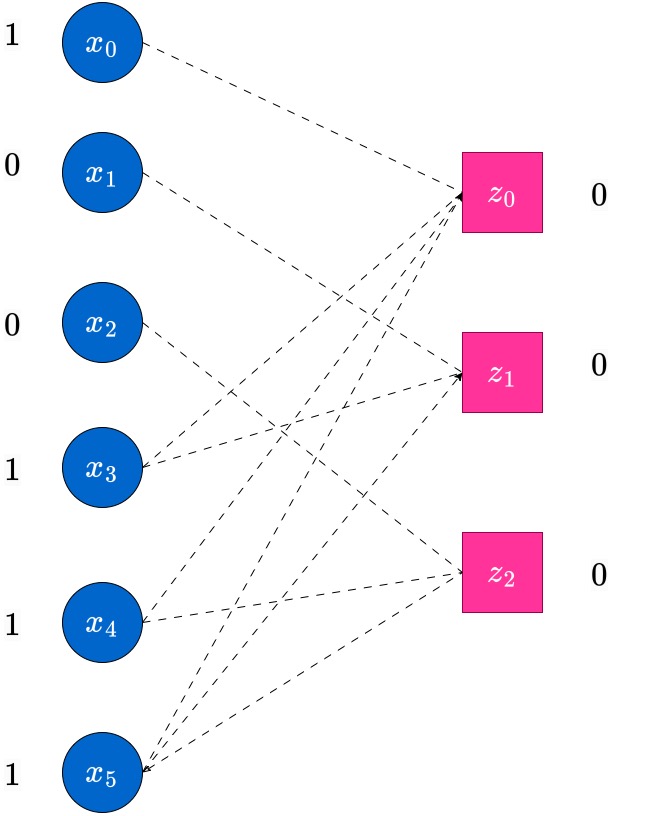

At the end of this round, those erased variable nodes which have received messages from check nodes update their values. The updated value is equal to the majority of the incoming messages at the variable node (on the erasure channel, all incoming messages agree). In this case, the erased variable node receives a single message from the degree-1 check node, and this becomes its updated value. The edge between them is now used up, and hence is grayed out and drawn as a dotted edge. Further, the value of this check node is also updated after this round, and the check node plays no further part in the decoding process.

The iterations now continue as before. Messages are passed from variable nodes to check nodes, the check node values are updated, and the check nodes with degree 1 pass messages to the variable nodes, thereby decoding bits which were erased. The reader is encouraged to go carefully over the figures below, along with their captions, to understand the process of decoding.
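The complete procedure can be summarized in a minimal sketch of a peeling decoder. It is not a literal transcription of the round-by-round figures: instead of keeping explicit check node values and dotted edges, it recomputes each check node's value from the currently known bits and processes the check nodes one after another. On the erasure channel this reaches the same final result. The matrix `H` is a hypothetical $(7,4)$ Hamming parity check matrix (not the one of this experiment), and `None` is an assumed erasure marker.

```python
# Hypothetical example code (NOT the matrix of this experiment).
H = [
    [1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]


def peeling_decode(H, y):
    """Repeatedly resolve erasures using check nodes of residual degree 1.

    Returns the (possibly only partially) decoded word; positions that
    could not be resolved remain None.
    """
    x = list(y)
    progress = True
    while progress:
        progress = False
        for row in H:
            neighbours = [i for i, h in enumerate(row) if h == 1]
            erased = [i for i in neighbours if x[i] is None]
            if len(erased) == 1:
                # Check node value: XOR of all known neighbouring bits.
                value = sum(x[i] for i in neighbours if x[i] is not None) % 2
                # The parity constraint forces the single erased bit.
                x[erased[0]] = value
                progress = True
    return x


# Assumed transmitted codeword 1110000 with erasures in positions 0 and 2.
print(peeling_decode(H, [None, 1, None, 0, 0, 0, 0]))  # [1, 1, 1, 0, 0, 0, 0]
```

The loop stops as soon as a full pass over the check nodes finds none with exactly one erased neighbour, which covers both the fully decoded and the partially decoded outcome.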

An example of partial (or incomplete) decoding

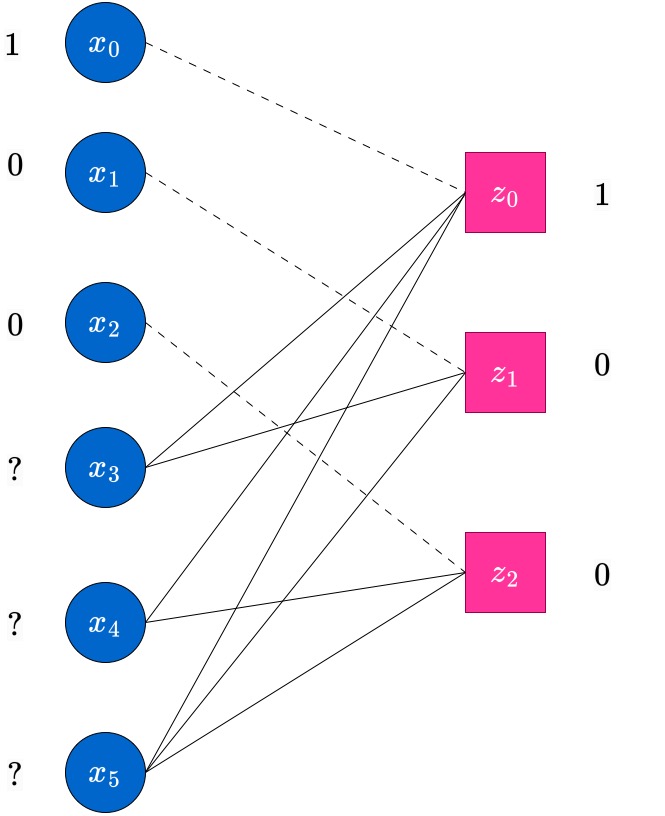

Now, for the same code, we describe a scenario in which the peeling decoder described in the example above completes the decoding only partially.

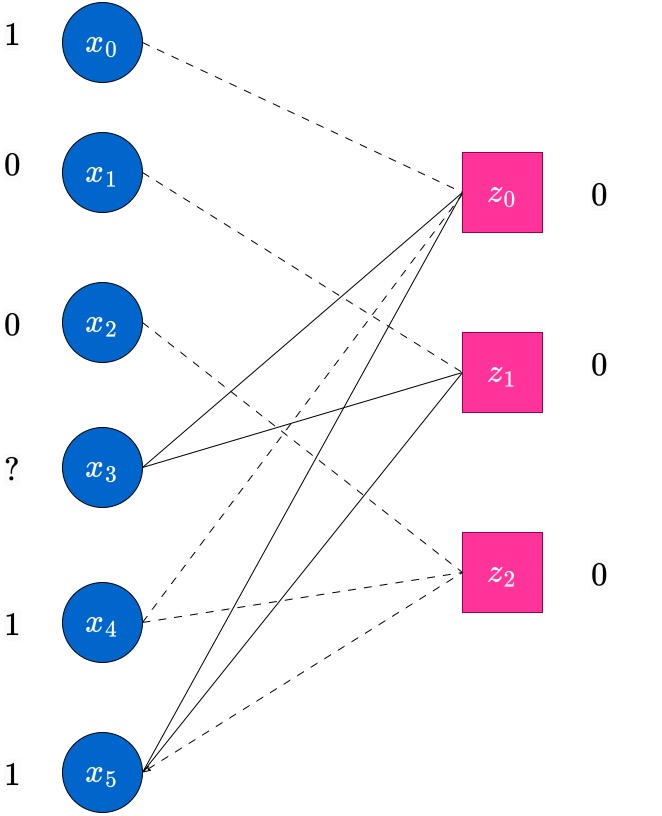

Consider the same transmitted codeword as before, and assume that the received vector has three erasures. We display the graph at the end of the round of the peeling decoding algorithm in which messages have been passed from the non-erased variable nodes along their outgoing edges.

Observe that at this point, there is no check node which has degree 1. Hence the decoder stops here, and we obtain only a partially decoded word (in this case, no additional erased bits have been decoded; in general this may not be the case). Such scenarios do occur with the peeling decoder, and the reader should be aware of them. However, the block-MAP decoder can actually decode the unique estimate, which happens to be correct, for this example. To see this, observe that there is only one codeword which is compatible with this received vector. The MAP decoder will find this unique estimate, though at higher complexity than the peeling decoder. We do not concern ourselves with this more complex decoder at this point.
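A similar situation can be reproduced on a small hypothetical example. The matrix `H` below is a $(7,4)$ Hamming parity check matrix and the received vector `y` is chosen for illustration. Neither is taken from this experiment, and `None` is an assumed erasure marker. The sketch computes the number of erased neighbours of every check node, and separately lists the codewords compatible with `y` by exhaustive search.

```python
from itertools import product

# Hypothetical example code (NOT the matrix of this experiment).
H = [
    [1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]

# Assumed transmitted codeword 1110000 with erasures in positions 2, 4 and 6.
y = [1, 1, None, 0, None, 0, None]


def residual_degrees(H, y):
    """Number of erased variable nodes attached to each check node."""
    return [sum(1 for h, yi in zip(row, y) if h == 1 and yi is None)
            for row in H]


def compatible_codewords(H, y):
    """Exhaustive search for codewords matching y in all unerased positions."""
    n = len(H[0])
    return [c for c in product((0, 1), repeat=n)
            if all(sum(h * x for h, x in zip(row, c)) % 2 == 0 for row in H)
            and all(yi is None or yi == ci for yi, ci in zip(y, c))]


print(residual_degrees(H, y))      # [3, 2, 2]: no degree-1 check node
print(compatible_codewords(H, y))  # [(1, 1, 1, 0, 0, 0, 0)]
```

No check node has residual degree 1, so a peeling decoder cannot make progress on this input, yet the exhaustive search finds exactly one compatible codeword. The price of the exhaustive search is a running time that grows exponentially with the code length.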