Convolutional Codes and Viterbi Decoding

The theory associated with Experiment-5 is divided into two parts:

- Encoding of convolutional codes

- Viterbi decoding

1. Encoding of convolutional codes

In experiment 1, we studied block codes and linear block codes. Convolutional codes are linear codes, but they are not "block" codes. For a block code, the given long stream of input bits is first divided into short message blocks and then a codeword is assigned to each message block. In convolutional codes, the input bitstream is directly given to the encoder, without dividing it into short message blocks. The output of convolutional encoder is the encoded bitstream corresponding to the input bitstream. Another main difference between block codes and convolutional codes is that the convolutional encoder has a "memory". For a convolutional code, the encoded output bit at any given time depends not only on the current input bits but also on previous input bits, hence the encoder is said to have a memory.

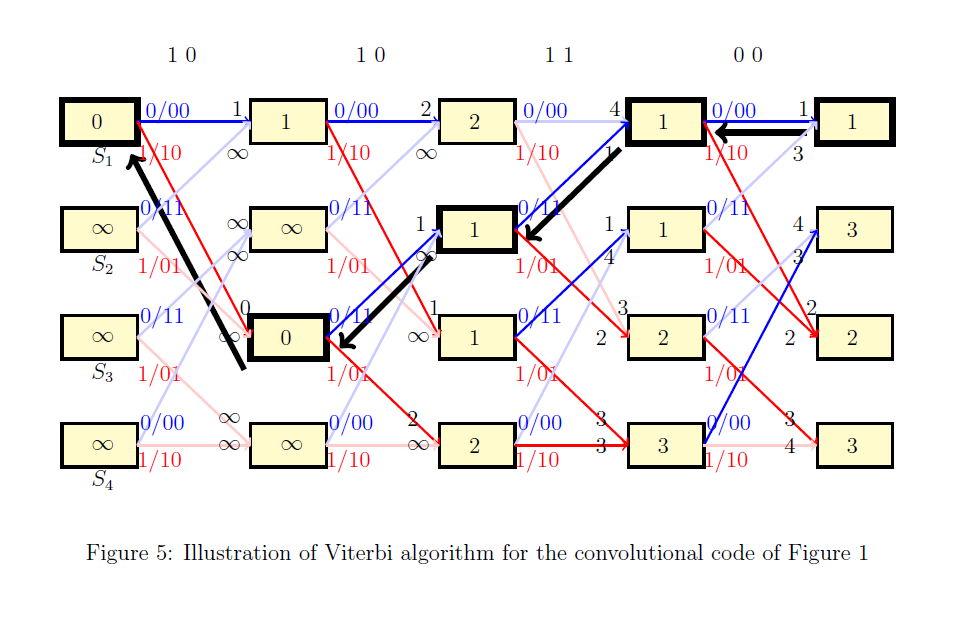

We shall now recall some basics about convolutional encoder. We shall focus on non-systematic feedforeward encoders of rate . Please refer [1], [2] for a detailed discussion about convolutional codes. We shall introduce the basics of convolutional encoder through an example. Throughout this section, we shall focus on the rate convolutional encoder illustrated in Figure~1. As shown, this encoder consists of the system formed by two shift register, two adders, and the corresponding connections. The memory of this encoder is equal to the number of shift registers.

For Figure 1, we have . The input bitstream is denoted by such that at time instant , the bit enters the system. The contents of the shift registers at time are and respectively. For the sake of simplicity we shall assume that at time , the contents of both the shift registers are empty, i.e., bit is stored at both the registers.The output of the encoder consists of two bitstreams denoted by and such that the final encoded bitstream is given by .

Let and denote the connections corresponding to the first and second output of the encoder. In general, each corresponds to the impulse response of the linear system corresponding to the encoder. For a non-systematic feedforeward encoder with memory , each will be a vector of length given by . and are called generator sequences of the encoder. For the encoder illustrated in Figure 1, and are given by

(1)

The -th output bit of output sequence for is given by

... ,

where denotes the convolution operation. From Eq (1), for the encoder illustrated in Figure 1, at any time instance , and are given by

.

It can be seen that the -th output bit depends on previous input bits and hence the encoder is said to have memory . Since the output bit is obtained by taking convolution of the input bitstream and the generator sequence, this code is called as convolutional code.

Observe that for the example of Figure 1, for each input bit , we get two output bits ( and ) and hence the rate of this code is equal to . Similarly one can have rate convolutional code. Note that one can design a convolutional code with any arbitrary rate with . For a rate convolutional code, for input bits we get output bits. In Figure 1, we considered non-systematic feedforeward encoder implementation. One can also have various other encoder implementations. A detailed discussion about these topics can be found in [1] and [2].

1.1 State and trellis diagram of a convolutional code

The operation of a convolutional code can be described conveniently using the state and trellis diagram associated with it. We will continue using example of Figure 1 to explain the basics of state and trellis diagram. Recall that for this example, at any time instant , the input bit is , the contents of the shift registers are and , and the output bits are and .

The state of an encoder is defined as the contents of its shift registers. Let denotes the state at time . Then for Figure 1, is given by

.

It can be seen that can take all possible values () corresponding to all possible values and can take. Suppose the encoder is at state . Then for the input the encoder will jump to to state and the corresponding output bits will be and .

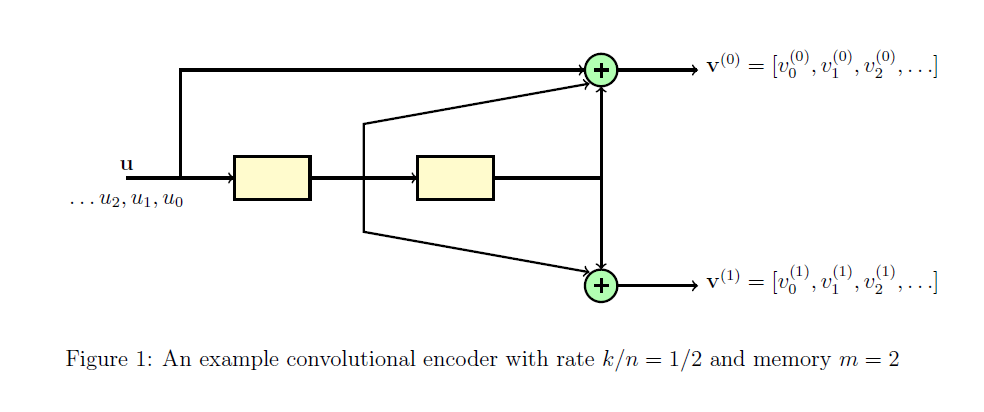

For example, let . Then for input the encoder will jump to state and the corresponding output bits will be and . All possible combinations of states and their transitions corresponding to all possible inputs is compactly represented using the state diagram. The state diagram of the encoder of Figure 1 is illustrated in Figure . In this figure, the transitions corresponding to input are indicated using red color and transitions corresponding to input are indicated using blue color. We shall use this color representation throughout the theory overview.

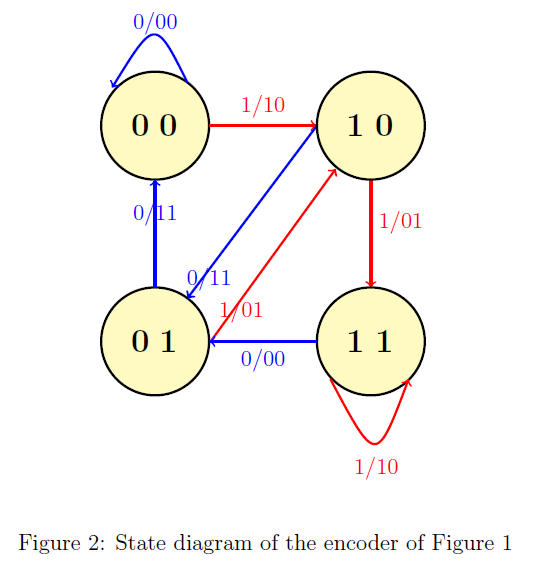

It can be seen that for the given input bitstream, the corresponding encoded output bitstream can be obtained conveniently by considering the transitions of the system from one state to another and noting the corresponding output bits. This time evolution of the state diagram is called as a trellis diagram. For any time instant , and are called as present state and next state respectively.

The trellis diagram corresponding to the state diagram of Figure 2 or three time instances is shown in Figure 3. Suppose . Then for the corresponding the time evolution is illustrated in Figure 3 via thick lines. It can be seen that the output bitstream will be . Note that any path in this trellis corresponds to a valid codeword and vice versa.

2. Viterbi decoding

In this section, we shall study Viterbi algorithm for decoding convolutional codes. This is an optimum, maximum likelihood (ML) decoding algorithm. Recall that in Experiment-4, we studied standard array and syndrome decoding, which was also an ML decoding algorithm. In Experiment-5, we consider the situation when the noise is introduced by the binary symmetric channel with crossover probability , denoted by BSC() and provide a version of Viterbi algorithm that can be applied when noise is introduced by the BSC(). Note that Viterbi algorithm, in general, can be applied other channel models as well (see [1], [2] for details).

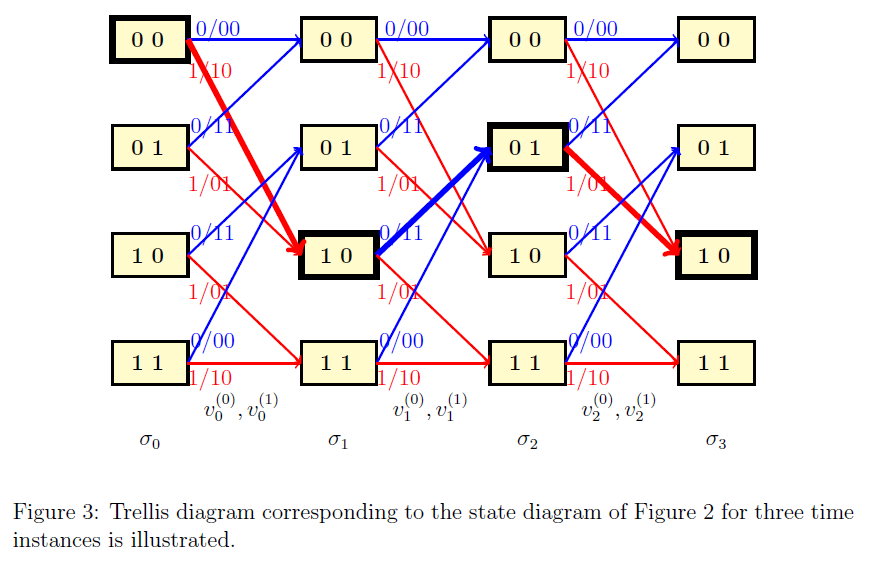

We shall continue using example of Figure~1 to illustrate the Viterbi algorithm. Let us denote the received bitstream by . For decoding this received sequence using Viterbi algorithm, the corresponding trellis with time instances is considered such that the received bits are associated with the -th section of the trellis for (see Figure 3). For the sake of simplicity we assume that the encoder starts at all-zero state. However, note that one can adapt Viterbi algorithm to situation when encoder does not start with all-zero state as well. Let us denote the states and by and respectively.

Viterbi algorithm can be divided into two parts; forward pass and reverse pass. In the forward pass, we move from left to right in the trellis and in the reverse pass we move from right to left. In order to illustrate the steps of Viterbi algorithm, we need to introduce two terminologies; state metric and path metric. As the name indicates, state metric is associated with all possible states at any time instant in the -step trellis diagram. Similarly, path metric is associated with all possible paths from state to state , at any time instant . For the BSC, state and path metrics are some positive integers and their values are updated as the algorithm progresses.

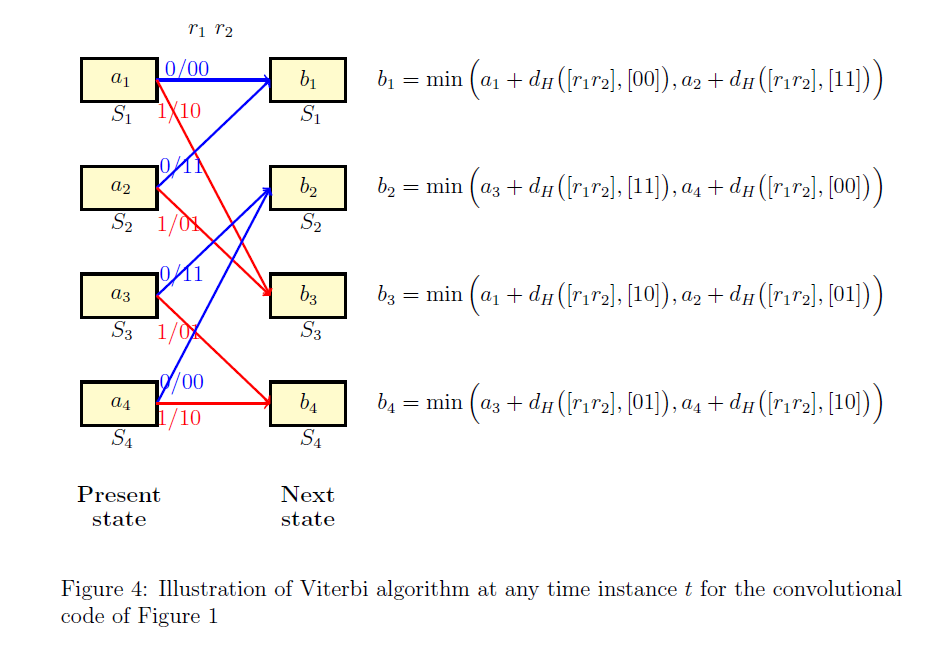

Before providing the detailed steps of the algorithm, let us first focus on the steps of the algorithm at any time instance . Consider the section of the trellis at any time instance as illustrated in Figure 4. For the sake of simplicity of notation, we will not use the subscript in this discussion. Let and be the received bits associated with this section of the trellis.

Observe that in any trellis section, there are total paths from a present state to next state. The path metric associated with any path is equal the Hamming distance between the vector and codebits associated with that path.

Let and be the state metrics associated with the state at present and next time instances respectively, for .

Suppose the state metrics associated with the present states are known. Then the state metrics associated with the next states are computed as illustrated in Figure 4. Observe that at time instant , there are exactly two arriving paths at any state and the computation of corresponds to choosing the path with minimum weight. Further at any next state, discard the arriving path that corresponds to larger value.If there are ties, discard any path arbitrarily.

For example, suppose and . Then will be computed as follows

The path from to is discarded from the trellis since it has a larger weight.

It can be observed from the previous paragraph that, for every state at any time instant , the arriving path with the minimum Hamming weight is chosen. This forms the key idea of the Viterbi algorithm. We are now ready now summarize the steps of Viterbi algorithm.

Forward pass :

- At time instant : The initial metric associated with state is set to and the metrics associated with the remaining states are set to (see Figure 5).

- For time instances : At any time instant , the metric associated with every next state is obtained using the state metrics of the previous states. Detailed steps towards this are described in the previous paragraphs. Further, an arriving path to the given state with larger weight is discarded. In Figure 5, discarded paths are indicated using thin lines. This is done sequentially as we move from left to right till we reach to the end of trellis diagram.

Reverse pass :

- At the end of the trellis, choose the state with the minimum value. For example in Figure 5, state has the minimum value. This is indicated using bold black box. If there are multiple states with the same minimum value, choose any state arbitrarily.

- Starting from a state with the minimum value, trace back along the path from right to left till we reach to the beginning of the trellis. This path corresponds to the decoded codebit stream. Figure 5, this is indicated using thick black lines.