Convergence of Random Variables

Introduction

In probability theory and statistical inference, we often want to estimate an unobservable random variable $X$ through a sequence of approximations. Suppose we cannot observe $X$ directly, but we can perform measurements or experiments to obtain estimates $X_1, X_2, X_3, \dots$. Each subsequent estimate is derived from additional data or refined methodology, with the hope that as $n$ increases, $X_n$ provides a more accurate approximation of $X$.

This leads us to the concept of convergence: we want to understand whether and how the sequence $X_1, X_2, X_3, \dots$ approaches $X$ as $n \to \infty$. In probability theory, convergence is not a single notion. There are several types, each capturing a different sense in which $X_n$ may become "close" to $X$. These include:

- Almost Sure Convergence: $X_n$ converges to $X$ with probability 1.

- Convergence in Probability: For any $\epsilon > 0$, the probability that $|X_n - X| \ge \epsilon$ approaches zero as $n \to \infty$.

- Convergence in Distribution: The distribution functions of $X_n$ converge to the distribution function of $X$ at all continuity points of the latter.

- Mean Square Convergence: The expected value of $|X_n - X|^2$ approaches zero as $n \to \infty$.

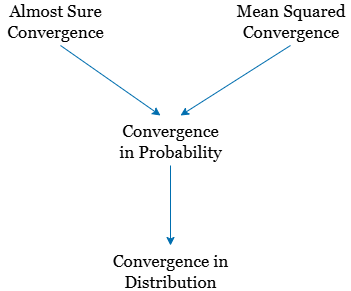

These are all different kinds of convergence. A sequence might converge in one sense but not in another. Some of these convergence types are "stronger" than others and some are "weaker": if Type A convergence is stronger than Type B convergence, then Type A convergence implies Type B convergence. The accompanying figure summarizes how these types of convergence are related. In the figure, the stronger types of convergence are on top and, as we move to the bottom, the convergence becomes weaker. For example, using the figure, we conclude that if a sequence of random variables $X_1, X_2, X_3, \dots$ converges in probability to a random variable $X$, then the sequence converges in distribution to $X$ as well.

1. Almost Sure Convergence

Definition

A sequence of random variables $X_1, X_2, X_3, \dots$ converges almost surely to a random variable $X$ if:

$$P\left(\lim_{n \to \infty} X_n = X\right) = 1.$$

This means that $X_n(\omega)$ converges to $X(\omega)$ for almost every outcome $\omega$ in the sample space: the set of outcomes on which convergence fails has probability 0.

Example

Consider, for instance, the sequence:

$$X_n(\omega) = 1 + \frac{1}{n} \quad \text{for every outcome } \omega.$$

As $n \to \infty$, $X_n(\omega) \to 1$ for all $\omega$. Therefore, $X_n$ converges almost surely to 1.

2. Convergence in Probability

Definition

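Convergence in probability means that the probability of $X_n$ deviating from $X$ by at least $\epsilon$ becomes negligible as $n$ grows. A sequence $X_1, X_2, X_3, \dots$ converges in probability to $X$ if, for every $\epsilon > 0$:

$$\lim_{n \to \infty} P\left(|X_n - X| \ge \epsilon\right) = 0.$$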

Example

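Define, for instance:

$$X_n = \begin{cases} 1 & \text{with probability } \frac{1}{n}, \\ 0 & \text{with probability } 1 - \frac{1}{n}. \end{cases}$$

Then $X_n \to 0$ in probability, since for every $0 < \epsilon \le 1$:

$$P\left(|X_n - 0| \ge \epsilon\right) = P(X_n = 1) = \frac{1}{n} \to 0 \quad \text{as } n \to \infty,$$

and the probability is exactly 0 for $\epsilon > 1$.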
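The definition can also be checked numerically. The sketch below is only an illustration under stated assumptions: it takes a sequence in which $X_n$ equals 1 with probability $1/n$ and 0 otherwise (chosen here for concreteness), and the function name `estimate_deviation_prob`, the threshold `eps`, the trial count, and the seed are all hypothetical choices. It estimates $P(|X_n - 0| > \epsilon)$ by simulation for a few values of $n$.

```python
import random

def estimate_deviation_prob(n, eps=0.5, trials=20000, seed=0):
    """Monte Carlo estimate of P(|X_n - 0| > eps).

    Assumption for illustration: X_n = 1 with probability 1/n, else 0.
    """
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    hits = 0
    for _ in range(trials):
        x_n = 1 if rng.random() < 1.0 / n else 0
        if abs(x_n - 0) > eps:
            hits += 1
    return hits / trials

# The estimated probability should shrink roughly like 1/n.
for n in (1, 10, 100, 1000):
    print(n, estimate_deviation_prob(n))
```

The estimates should fall toward zero as $n$ grows, which is what convergence in probability to 0 requires for this choice of `eps`.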

As mentioned previously, convergence in probability is stronger than convergence in distribution. That is, if $X_n \xrightarrow{p} X$, then $X_n \xrightarrow{d} X$. The converse is not necessarily true.

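For example, let $X_1, X_2, X_3, \dots$ be a sequence of i.i.d. $\text{Bernoulli}\left(\frac{1}{2}\right)$ random variables. Let also $X \sim \text{Bernoulli}\left(\frac{1}{2}\right)$ be independent from the $X_i$'s. Then $X_n \xrightarrow{d} X$, because every $X_n$ has the same distribution as $X$. However, $X_n$ does not converge in probability to $X$, since $|X_n - X|$ is in fact also a $\text{Bernoulli}\left(\frac{1}{2}\right)$ random variable, and

$$P\left(|X_n - X| \ge \epsilon\right) = \frac{1}{2} \quad \text{for } 0 < \epsilon < 1.$$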
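The counterexample can be verified by exact enumeration rather than simulation. The sketch below assumes the Bernoulli parameter is $\frac{1}{2}$ (an assumption made here for concreteness) and uses a hypothetical helper `prob_far_apart`. It sums the joint probabilities of the outcomes where $X_n$ and $X$ differ by at least $\epsilon$.

```python
from fractions import Fraction
from itertools import product

# Assumed parameter: both X_n and X are Bernoulli(1/2) and independent.
p = Fraction(1, 2)
pmf = {0: 1 - p, 1: p}

def prob_far_apart(eps):
    """Exact P(|X_n - X| >= eps) for independent Bernoulli(1/2) X_n and X."""
    total = Fraction(0)
    for x_n, x in product(pmf, repeat=2):
        if abs(x_n - x) >= eps:
            total += pmf[x_n] * pmf[x]  # independence: joint pmf is a product
    return total

print(prob_far_apart(Fraction(1, 10)))
```

The result does not depend on $n$ at all, so the probability cannot tend to zero, even though each $X_n$ has exactly the same distribution as $X$.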

A special case in which the converse is true is when $X_n \xrightarrow{d} c$, where $c$ is a constant. In this case, convergence in distribution implies convergence in probability. We can state the following theorem:

Theorem

If $X_n \xrightarrow{d} c$, where $c$ is a constant, then $X_n \xrightarrow{p} c$.

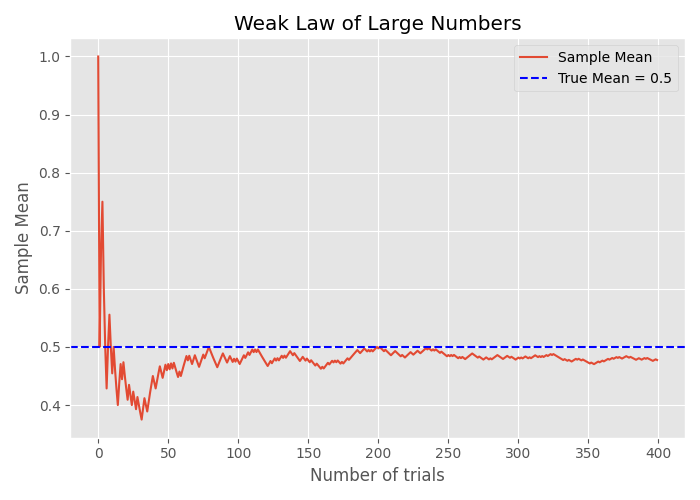
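One way to see why the theorem holds is the following short argument, given here as a sketch rather than a full proof. The constant $c$ has CDF $F(x) = 0$ for $x < c$ and $F(x) = 1$ for $x \ge c$, which is continuous everywhere except at $x = c$. For any $\epsilon > 0$, the points $c - \epsilon$ and $c + \frac{\epsilon}{2}$ are therefore continuity points, and

$$
\begin{aligned}
P\left(|X_n - c| \ge \epsilon\right)
&= P(X_n \le c - \epsilon) + P(X_n \ge c + \epsilon) \\
&\le F_{X_n}(c - \epsilon) + 1 - F_{X_n}\left(c + \tfrac{\epsilon}{2}\right) \\
&\to 0 + 1 - 1 = 0 \quad \text{as } n \to \infty.
\end{aligned}
$$

The second line uses $P(X_n \ge c + \epsilon) \le P\left(X_n > c + \tfrac{\epsilon}{2}\right)$, and the limit uses convergence of $F_{X_n}$ at the two continuity points.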

An example of convergence in probability is the weak law of large numbers (WLLN).

3. Convergence in Distribution

Definition

Convergence in distribution concerns the behavior of the distribution functions: the distributions of $X_n$ approach the distribution of $X$ as $n \to \infty$. Formally, a sequence $X_1, X_2, X_3, \dots$ converges in distribution to $X$ if, for all points $x$ where the cumulative distribution function (CDF) $F_X$ is continuous:

$$\lim_{n \to \infty} F_{X_n}(x) = F_X(x).$$

Example

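Let $X_2, X_3, X_4, \dots$ be a sequence of random variables with, for instance, the cumulative distribution function:

$$F_{X_n}(x) = \begin{cases} 1 - \left(1 - \frac{1}{n}\right)^{nx} & x > 0, \\ 0 & \text{otherwise.} \end{cases}$$

Since $\left(1 - \frac{1}{n}\right)^{nx} \to e^{-x}$ as $n \to \infty$, we have $F_{X_n}(x) \to 1 - e^{-x}$ for $x > 0$. Then $X_n$ converges in distribution to an $\text{Exponential}(1)$ random variable.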
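Pointwise convergence of CDFs is easy to inspect numerically. The sketch below assumes an illustrative family $F_n(x) = 1 - (1 - 1/n)^{nx}$ for $x > 0$ (chosen here as an example) and compares it with the Exponential(1) CDF on a small grid. The function names and the grid are hypothetical choices.

```python
import math

def cdf_n(x, n):
    """Illustrative CDF F_n(x) = 1 - (1 - 1/n)**(n*x) for x > 0, else 0."""
    if x <= 0:
        return 0.0
    return 1.0 - (1.0 - 1.0 / n) ** (n * x)

def cdf_limit(x):
    """CDF of an Exponential(1) random variable."""
    return 1.0 - math.exp(-x) if x > 0 else 0.0

def max_gap(n, grid=(0.1, 0.5, 1.0, 2.0, 5.0)):
    """Largest |F_n(x) - F(x)| over a few sample points."""
    return max(abs(cdf_n(x, n) - cdf_limit(x)) for x in grid)

# The gap should shrink as n grows.
for n in (2, 10, 100, 10000):
    print(n, round(max_gap(n), 6))
```

A grid check like this only suggests convergence at the sampled points. The formal statement still requires the limit to hold at every continuity point of the limiting CDF.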

4. Mean-Square Convergence

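A sequence of random variables $X_1, X_2, X_3, \dots$ converges to a random variable $X$ in mean square (m.s.) if

$$\lim_{n \to \infty} E\left(|X_n - X|^2\right) = 0.$$

We often write this as $X_n \xrightarrow{m.s.} X$.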
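Mean-square convergence is often convenient because it gives convergence in probability almost for free. A short derivation, using Markov's inequality applied to the nonnegative random variable $|X_n - X|^2$, runs as follows for any $\epsilon > 0$:

$$
P\left(|X_n - X| \ge \epsilon\right)
= P\left(|X_n - X|^2 \ge \epsilon^2\right)
\le \frac{E\left(|X_n - X|^2\right)}{\epsilon^2}
\to 0 \quad \text{as } n \to \infty.
$$

So if the mean squared error tends to zero, the probability of a deviation of size at least $\epsilon$ must tend to zero as well.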

5. Relationships Between Different Types of Convergence

As discussed previously, the different types of convergence are related to each other.

- Almost Sure Convergence $\Rightarrow$ Convergence in Probability $\Rightarrow$ Convergence in Distribution

- Mean Square Convergence (convergence in the $L^2$ norm) $\Rightarrow$ Convergence in Probability $\Rightarrow$ Convergence in Distribution

| Type of Convergence | Notation | Implies |
|---|---|---|
| Almost Sure | $X_n \xrightarrow{a.s.} X$ | Convergence in Probability |
| In Mean Square | $X_n \xrightarrow{m.s.} X$ | Convergence in Probability |
| In Probability | $X_n \xrightarrow{p} X$ | Convergence in Distribution |
| In Distribution | $X_n \xrightarrow{d} X$ | None |

However, the converses do not generally hold.
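To make one failed converse concrete, the sketch below uses a standard illustrative sequence (an assumption introduced here): $X_n = n$ with probability $1/n$ and $X_n = 0$ otherwise. It computes exactly both the deviation probability and the second moment. The function names are hypothetical.

```python
from fractions import Fraction

def deviation_prob(n, eps):
    """Exact P(|X_n - 0| >= eps), eps > 0, when X_n = n w.p. 1/n, else 0."""
    return Fraction(1, n) if n >= eps else Fraction(0)

def second_moment(n):
    """Exact E(|X_n - 0|^2) = n^2 * (1/n) + 0^2 * (1 - 1/n)."""
    return n**2 * Fraction(1, n) + 0 * (1 - Fraction(1, n))

# Deviation probability shrinks like 1/n while the second moment grows like n.
for n in (1, 10, 100, 1000):
    print(n, deviation_prob(n, Fraction(1, 2)), second_moment(n))
```

Here the deviation probability tends to zero, so the sequence converges to 0 in probability, while the second moment grows without bound, so it does not converge to 0 in mean square.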

6. Weak Law of Large Numbers (WLLN)

Statement

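Let $X_1, X_2, \dots, X_n$ be i.i.d. random variables with finite mean $\mu = E(X_i)$, and let $\bar{X}_n = \frac{1}{n} \sum_{i=1}^{n} X_i$ denote the sample mean. Then, for every $\epsilon > 0$:

$$\lim_{n \to \infty} P\left(|\bar{X}_n - \mu| \ge \epsilon\right) = 0.$$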

Intuition

The sample average converges in probability to the expected value as the sample size increases.

Example

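If, for instance, $X_i \sim \text{Bernoulli}(p)$, then the sample mean $\bar{X}_n = \frac{1}{n} \sum_{i=1}^{n} X_i$ converges to $p$ in probability.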
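A small simulation can illustrate the WLLN. The sketch below assumes i.i.d. Bernoulli(0.5) draws, so the mean is 0.5. The function names, the tolerance `eps`, the number of runs, and the seeds are hypothetical choices. It reports how often the sample mean lands at least `eps` away from the true mean across repeated runs.

```python
import random

def sample_mean(n, p=0.5, seed=0):
    """Mean of n simulated Bernoulli(p) draws (assumed distribution)."""
    rng = random.Random(seed)
    return sum(1 if rng.random() < p else 0 for _ in range(n)) / n

def deviation_frequency(n, p=0.5, eps=0.05, runs=200):
    """Fraction of runs in which |sample mean - p| >= eps."""
    bad = sum(abs(sample_mean(n, p, seed=s) - p) >= eps for s in range(runs))
    return bad / runs

# The frequency of large deviations should drop as the sample size grows.
for n in (10, 100, 1000):
    print(n, deviation_frequency(n))
```

The reported frequency is an empirical stand-in for $P(|\bar{X}_n - \mu| \ge \epsilon)$, and it should decrease toward zero as $n$ increases.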